Why Most AI Workflows Fail in SMBs (And How to Make Them Actually Usable)

The real reasons AI workflows stall in SMBs

Small and medium-sized businesses (SMBs) typically operate with lean teams and juggle a range of tools. Unfortunately, that combination can break AI implementations much faster than any hype admits.

Tool sprawl leads to fragile handoffs between apps.

Messy data fills inconsistent fields, creating chaos for models.

Process debt is common: steps live as tribal knowledge and are rarely documented.

Unclear ownership means no one addresses drift or errors.

Security friction blocks access right when you need it most.

Misaligned incentives reward just output, not meaningful outcomes.

Lack of measurement hides both quality and cost per task.

Tool sprawl creates fragile handoffs

Consider a sales update that relies on a CRM, spreadsheets, wikis, and chat apps. With every handoff, critical context is lost. AI agents have to guess, and often guess wrong. If you’re planning to consolidate, check out this overview of all-in-one workspaces vs. specialized project tools for guidance.

Reduce the number of systems where the workflow operates.

Standardize naming conventions and statuses across tools.

Designate a single source of truth for each record type.

Log workflow events in one location for AI triggers.
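As a rough illustration of the last two points, here is a minimal Python sketch that maps tool-specific statuses onto one shared vocabulary and appends every event to a single log. The tool names, status labels, and the `log_event` helper are all hypothetical; a real setup would read them from your own CRM and spreadsheets.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from each tool's status labels to one shared vocabulary.
STATUS_MAP = {
    "crm": {"Closed Won": "won", "Closed Lost": "lost", "Open": "open"},
    "sheet": {"done": "won", "dead": "lost", "wip": "open"},
}


@dataclass
class WorkflowEvent:
    source: str      # which tool emitted the event
    record_id: str   # ID in the designated source of truth
    status: str      # normalized status
    at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# One place for AI triggers to read from (in memory here, for illustration only).
EVENT_LOG: list[WorkflowEvent] = []


def log_event(source: str, record_id: str, raw_status: str) -> WorkflowEvent:
    """Normalize a tool-specific status and append it to the shared log."""
    try:
        status = STATUS_MAP[source][raw_status]
    except KeyError:
        # Fail loudly instead of letting an AI agent guess at an unknown status.
        raise ValueError(f"Unmapped status {raw_status!r} from {source!r}") from None
    event = WorkflowEvent(source, record_id, status)
    EVENT_LOG.append(event)
    return event
```

Rejecting unmapped statuses is a deliberate choice in this sketch: a new label in one tool surfaces as an error instead of as a silent bad guess downstream.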

Your data isn’t ready for automation

Large language models (LLMs) perform best with organized, clearly labeled data. But SMBs often have duplicate entries, ambiguous free text, and missing data owners.

Swap free text inputs for picklists that define stage, priority, and reason.

Specify required fields for each automated step.

Enforce correct formats with validation at the point of entry.

Implement a deduplication rule and apply it every week.

Maintain a golden record for each account to avoid fragmentation.

Label personally identifiable information (PII) and set access by user role.
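The checks above can be sketched in a few lines of Python. The picklist values, required fields, and the choice of email as the deduplication key are assumptions made for illustration, not a recommended schema.

```python
import re

# Hypothetical picklists and required fields for a "deal" record.
PICKLISTS = {
    "stage": {"lead", "qualified", "proposal", "won", "lost"},
    "priority": {"low", "medium", "high"},
}
REQUIRED = ("account_email", "stage", "priority")
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record is usable."""
    errors = [f"missing {name}" for name in REQUIRED if not record.get(name)]
    for name, allowed in PICKLISTS.items():
        value = record.get(name)
        if value and value not in allowed:
            errors.append(f"{name}={value!r} not in picklist")
    email = record.get("account_email")
    if email and not EMAIL_RE.match(email):
        errors.append("account_email has an invalid format")
    return errors


def dedupe(records: list[dict]) -> list[dict]:
    """Keep the first record per normalized email; records without one pass through."""
    seen: set[str] = set()
    unique = []
    for record in records:
        key = (record.get("account_email") or "").strip().lower()
        if not key:
            unique.append(record)  # nothing to match on, so leave it for manual review
            continue
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Running `validate` at the point of entry and `dedupe` on a weekly schedule mirrors the list above. Keeping the first record is only a stand-in for whatever golden-record rule you settle on.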

Bad prompts? Usually bad process

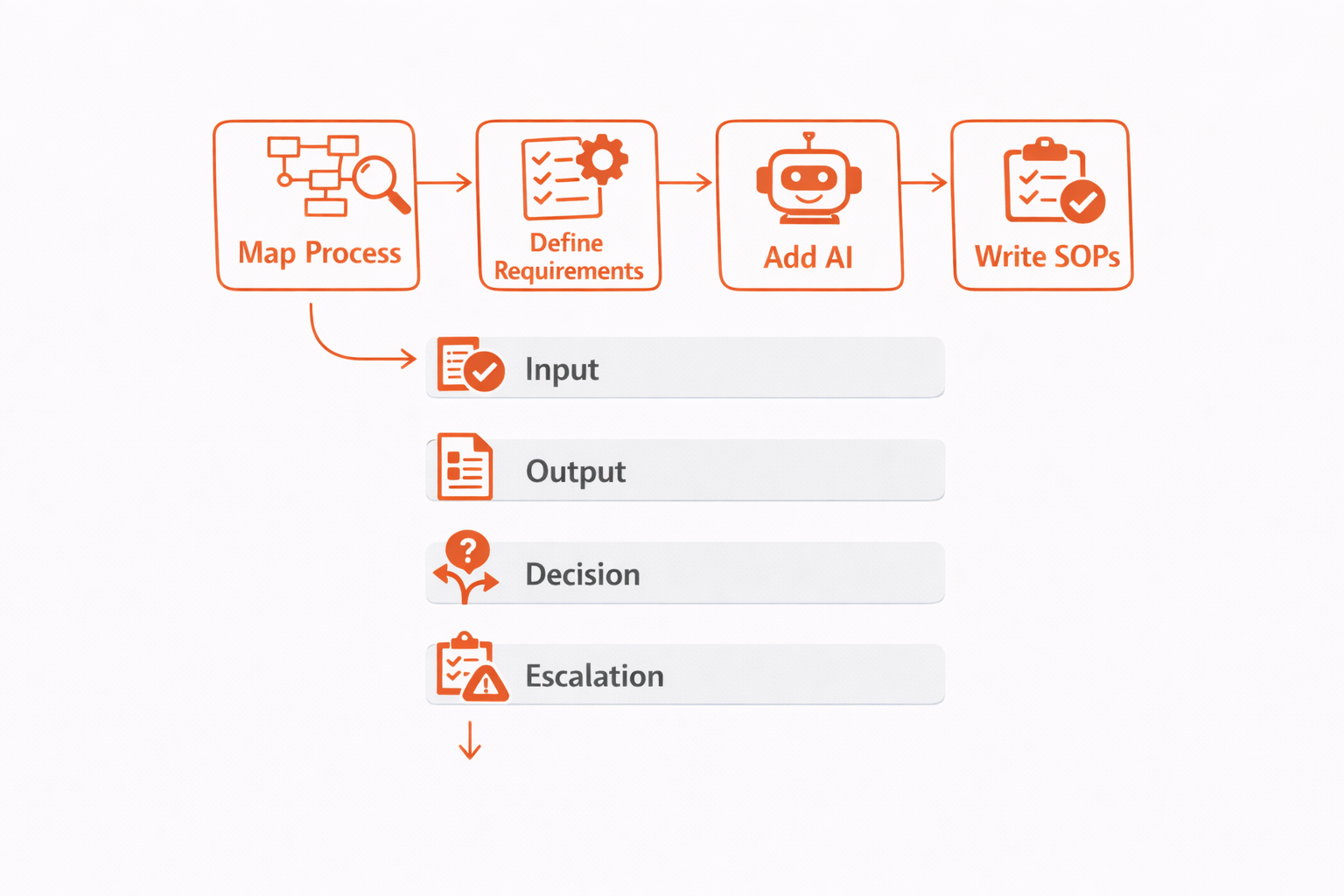

Improving prompts rarely solves deeper workflow issues. Start by mapping your process. Once the inputs and outputs are clear, add AI along with the acceptance criteria it must pass.

Write standard operating procedures (SOPs) as checks the AI must pass, not prose it can mimic. This works better than relying on clever prompt engineering alone.

Input: Make required fields and formats explicit.

Output: Define length, structure, and intended audience.

Decision: Spell out when to act, ask for input, or escalate issues.

Escalation: Assign an owner and a service-level agreement (SLA) for follow-up.
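One way to make an SOP something the AI can pass or fail is to express these four parts as data plus a small decision function. The sketch below is a simplified assumption: `StepContract`, its field names, and the word-count check stand in for whatever criteria your own process defines.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACT = "act"
    ASK = "ask"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class StepContract:
    """Hypothetical contract for one automated step."""
    required_inputs: tuple[str, ...]  # Input: fields that must be present
    max_output_words: int             # Output: a simple length limit
    escalation_owner: str             # Escalation: who picks it up
    escalation_sla_hours: int         # Escalation: how quickly they must respond


def decide(contract: StepContract, inputs: dict, draft: str) -> Decision:
    """Apply the contract to one AI draft and say what should happen next."""
    if any(not inputs.get(name) for name in contract.required_inputs):
        return Decision.ASK       # missing input: ask instead of guessing
    if len(draft.split()) > contract.max_output_words:
        return Decision.ESCALATE  # output breaks the contract: hand it to the owner
    return Decision.ACT
```

For example, with `StepContract(("account", "stage"), 50, "sales-ops", 4)`, a draft built from inputs that lack `stage` returns `Decision.ASK` no matter how good the text looks.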

Design a workflow that humans trust

Adoption hinges on trust. Build your system with readable errors and sensible defaults, so users feel supported rather than second-guessed.

Make AI failure modes visible

Display confidence scores and supporting evidence for every action.

Always cite data sources in AI-generated summaries.

Offer one-click undo and allow designated owners to override decisions.

Send low-confidence tasks to human team members for review.

Alert users to any drift in fields or intent detection.
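A minimal sketch of the review routing described above, assuming each proposed action carries a confidence score and a list of cited sources. The 0.8 threshold and the `AIAction` shape are illustrative assumptions, not values from any particular product.

```python
from dataclasses import dataclass, field

REVIEW_THRESHOLD = 0.8  # assumed cutoff; tune it against your own review data


@dataclass
class AIAction:
    summary: str
    confidence: float  # 0.0 to 1.0, however your system estimates it
    sources: list[str] = field(default_factory=list)  # citations shown to the user


def route(action: AIAction) -> str:
    """Return 'auto' or 'human_review' for a proposed action."""
    if not action.sources:
        return "human_review"  # no evidence to display, so a person checks it
    if action.confidence < REVIEW_THRESHOLD:
        return "human_review"
    return "auto"
```

Treating missing citations as an automatic review keeps the rule about always citing data sources enforceable rather than aspirational.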

A 90-day plan for SMB AI adoption

Begin with a small focus and make outcomes measurable at every step.

Days 0–30

Pick a single workflow with clear financial impact.

Establish a baseline for cycle time, error rate, and cost.

Consolidate critical fields and assign clear owners.

Release an initial version with oversight from human reviewers.

Days 31–60

Add retrieval from trusted knowledge sources.

Automate key handoffs using webhooks or built-in events.

Publish a runbook detailing failure points and service-level agreements (SLAs).

Days 61–90

Expand to an adjacent workflow, one closely connected to the first.

Add dashboards that show quality improvements and cost savings.

Remove manual steps that no longer contribute meaningful value.

Architecture that actually scales on SMB budgets

Keep your tech stack simple and prioritize stable connections between components.

Use a central workspace for CRM, project management, and company knowledge.

Connect applications with a basic event bus for workflow orchestration.

Keep retrieval light: index only the fields that truly matter.

Mask PII and maintain logs for prompts and their respective outputs.

Opt for APIs that have straightforward quotas and transparent pricing models.
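To illustrate masking PII before prompts and outputs are logged, here is a rough Python sketch. The two regular expressions are simple heuristics chosen for this example; they catch common email and phone formats but will miss names, addresses, and unusual formats, so treat them as a starting point only.

```python
import re

# Heuristic patterns, assumed for illustration; not a complete PII detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# In-memory stand-in for wherever you keep prompt and output logs.
PROMPT_LOG: list[dict] = []


def mask_pii(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def log_exchange(prompt: str, output: str) -> None:
    """Store a prompt and its output with PII masked on both sides."""
    PROMPT_LOG.append({"prompt": mask_pii(prompt), "output": mask_pii(output)})
```

Masking at write time means the log never holds the raw values, which is simpler to reason about than masking when the log is read.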

Governance that doesn’t slow the team

Policy should empower delivery, not hinder it. Use the NIST AI Risk Management Framework as a baseline for managing AI risks.

Define RACI (Responsible, Accountable, Consulted, Informed) roles for every automated decision.

Document how long you retain data and keep tenant boundaries clear.

Review both prompts and outputs for potentially sensitive terms.

Record every human override, along with the reason for the intervention.

Conduct a risk review on a quarterly basis.
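Recording overrides can be as simple as an append-only log that refuses entries without a reason. The sketch below assumes a JSON Lines log and a hypothetical `record_override` helper; the field names are illustrative.

```python
import json
from datetime import datetime, timezone
from typing import TextIO


def record_override(log: TextIO, workflow: str, decision_id: str,
                    user: str, reason: str) -> dict:
    """Append one human override to a JSON Lines log and return the entry."""
    if not reason.strip():
        raise ValueError("An override needs a reason")
    entry = {
        "workflow": workflow,
        "decision_id": decision_id,
        "user": user,
        "reason": reason.strip(),
        "at": datetime.now(timezone.utc).isoformat(),
    }
    log.write(json.dumps(entry) + "\n")
    return entry
```

Opening the file in append mode, for example `open("overrides.jsonl", "a")`, keeps earlier entries intact, and the resulting file gives the quarterly risk review something concrete to read.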

Choose platforms that integrate your work, not ones that add more tabs

Prioritize platforms that unify data across CRM, project management, and company knowledge. All-in-one workspaces like Routine or ClickUp are strong options here. For CRM, HubSpot and Pipedrive are popular, while project teams might lean toward Asana or Monday. Let your unique workflows, rather than flashy brands, guide your platform choices.

Use a unified system for managing accounts, contacts, deals, tasks, and documents.

Enable native automations and webhooks to minimize manual work.

Apply field-level permissions and keep audit logs for compliance.

Evaluate integrations based on what your business actually uses.

Look for transparent pricing structures and defined usage caps.

Metrics that prove value, not vanity

Track the actual results of the work, not its reputation or industry buzz.

Calculate cost for each completed task.

Measure first-response time for AI-assisted tasks or support cases.

Track how often tasks succeed without needing human intervention.

Monitor the rate of human overrides, broken down by workflow.

Check hallucination rate on a sample of AI outputs.

Document revenue increases tied to specific AI-driven playbooks.

Review these numbers in a concise one-page report every week, then decide what needs to change next.
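Several of these numbers fall out of a plain list of task records. The sketch below assumes each run is logged with its cost, whether it completed, and whether a human overrode it; the `TaskRun` fields and the metric definitions are one reasonable reading of the list above, not a standard.

```python
from dataclasses import dataclass


@dataclass
class TaskRun:
    workflow: str
    cost_usd: float       # model and API spend attributed to this run
    completed: bool       # did the task reach its intended outcome?
    human_override: bool  # did a person step in and change the result?


def weekly_metrics(runs: list[TaskRun]) -> dict:
    """Summarize cost and quality for a batch of runs (for example, one week)."""
    if not runs:
        return {"cost_per_completed_task": None,
                "autonomous_success_rate": None,
                "override_rate": None}
    completed = [r for r in runs if r.completed]
    autonomous = [r for r in completed if not r.human_override]
    total_cost = sum(r.cost_usd for r in runs)  # failed runs still cost money
    return {
        "cost_per_completed_task": total_cost / len(completed) if completed else None,
        "autonomous_success_rate": len(autonomous) / len(runs),
        "override_rate": sum(r.human_override for r in runs) / len(runs),
    }
```

Dividing total spend by completed tasks, rather than by all runs, is a deliberate choice here: failed attempts raise the cost of each success instead of hiding in the average.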

Playbook: turn one broken flow into a durable win

Name the workflow and specify its owner.

Write a single-sentence statement of the desired outcome.

Map all inputs, systems, and handoffs involved.

Create clear data contracts for what’s expected as input and output.

Define acceptance criteria and SLAs for the workflow.

Build an initial version with human review in place.

Deploy to a test group of ten users and observe results.

Eliminate steps that don’t add measurable value.

Make it boring, then scale

The best AI feels almost mundane: it works quietly, hits SLAs, and removes repetitive toil. Start with a straightforward, reliable workflow. Once it runs smoothly, repeat the formula across the rest of your business for steady impact.

FAQ

Why do AI workflows often break down in small and medium-sized businesses?

AI workflows in SMBs frequently fail due to tool sprawl and messy data, which create fragile systems. Without a clear structure and ownership, AI efforts quickly become chaotic, leading to performance issues.

How can SMBs combat tool sprawl in their AI implementations?

SMBs should focus on consolidating systems, standardizing conventions, and centralizing sources of truth to combat tool sprawl. Eliminating redundant tools can significantly stabilize AI workflows.

What role does data preparation play in successful AI automation?

Data preparation is critical; poorly organized data leads to ineffective AI solutions. Enforcing formats, applying deduplication rules, and labeling sensitive fields gives the AI reliable data to work with.

Why is documenting processes important for AI adoption?

Undocumented tribal knowledge leads to misinformed AI decisions. Having clear SOPs ensures AI processes are predictable and correct, making them reliable and easier to scale.

How can businesses ensure AI decisions are trusted by the team?

Trust in AI decisions grows when failure modes are transparent and there are mechanisms for human oversight. Showing confidence scores and offering easy overrides builds that trust.

What metrics are essential for evaluating AI effectiveness in SMBs?

Focus on tangible results like cost per completed task and AI accuracy rather than industry buzz. These metrics reveal the true impact of AI on operations and guide necessary adjustments.

What are common governance issues in AI deployments?

Failures often stem from a lack of defined roles and clear policy. Governance should facilitate AI deployment, not impede it, with roles and responsibilities clearly delineated.

How should SMBs approach AI architecture on a budget?

SMBs should aim for simplicity and stability in their tech stack by selecting platforms that integrate well and provide straightforward APIs. Avoid complex setups that don’t yield proportional value.

Why is focusing on mundane, reliable AI important before scaling?

AI that works seamlessly and meets SLAs quietly is more valuable than flashy solutions. Starting with reliable workflows ensures a steady baseline that can be extended across the business.